Against Maxipok

Existential risk isn’t everything

This is a summary of “Beyond Existential Risk”, by Will MacAskill and Guive Assadi. The full version is available on our website.

Bostrom’s Maxipok principle suggests reducing existential risk should be the overwhelming focus for those looking to improve humanity’s long-term prospects. This rests on an implicitly dichotomous view of future value, where most outcomes are either near-worthless or near-best.

We argue against the dichotomous view, and against Maxipok. In the coming century, values, institutions, and power distributions could become locked-in. But we could influence what gets locked-in, and when and whether it happens at all—so it is possible to substantially improve the long-term future by channels other than reducing existential risk.

And this is a fairly big deal, both because following Maxipok could mean leaving value on the table and because, in some cases, it could actually do harm.

Defining Maxipok

Picture a future where some worry that terrorist groups could use advanced bioweapons to wipe out humanity. The world’s governments could either coalesce into a strong world government, which would reduce the extinction risk from 1% to 0%, or maintain the status quo. But the world government would lock in authoritarian control and undermine hopes for political diversity, decreasing the value of all future civilisation by, say, 5%.

If we prioritised existential risk reduction above everything else, we would support the strong world government. But we’d be saving the world in a way that makes it worse.
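To make the trade-off concrete, here is a minimal sketch of the expected-value arithmetic. It assumes, purely for illustration, that extinction is worth 0 and that a surviving status-quo future is worth 1; the function name `expected_value` is hypothetical. Maxipok favours the world government because it maximises the probability of an OK outcome (100% versus 99%), but expected value favours the status quo.

```python
# Toy expected-value comparison for the world-government example.
# Assumption: extinction is worth 0 and a surviving status-quo future is worth 1.0.

def expected_value(p_extinction: float, value_if_survive: float) -> float:
    """Expected value when extinction yields 0 and survival yields value_if_survive."""
    return (1 - p_extinction) * value_if_survive


status_quo = expected_value(p_extinction=0.01, value_if_survive=1.0)
world_government = expected_value(p_extinction=0.0, value_if_survive=0.95)

print(f"status quo:       {status_quo:.2f}")        # 0.99
print(f"world government: {world_government:.2f}")  # 0.95
```

Under these assumptions the 5% loss in value outweighs the one-percentage-point reduction in extinction risk; the comparison would only flip if the world government cost less than 1% of the future's value.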

A popular view among longtermists is that trying to improve the long-term future amounts to trying to reduce existential risk. The clearest expression of this is Nick Bostrom’s Maxipok principle:

Maxipok: When pursuing the impartial good, one should seek to maximise the probability of an “OK outcome,” where an OK outcome is any outcome that avoids existential catastrophe.

Existential risk is not only the risk of extinction; more generally, it is the risk of a “drastic” curtailment of humanity’s potential, that is, a catastrophe that makes the future roughly as bad as human extinction.

Is future value all-or-nothing?

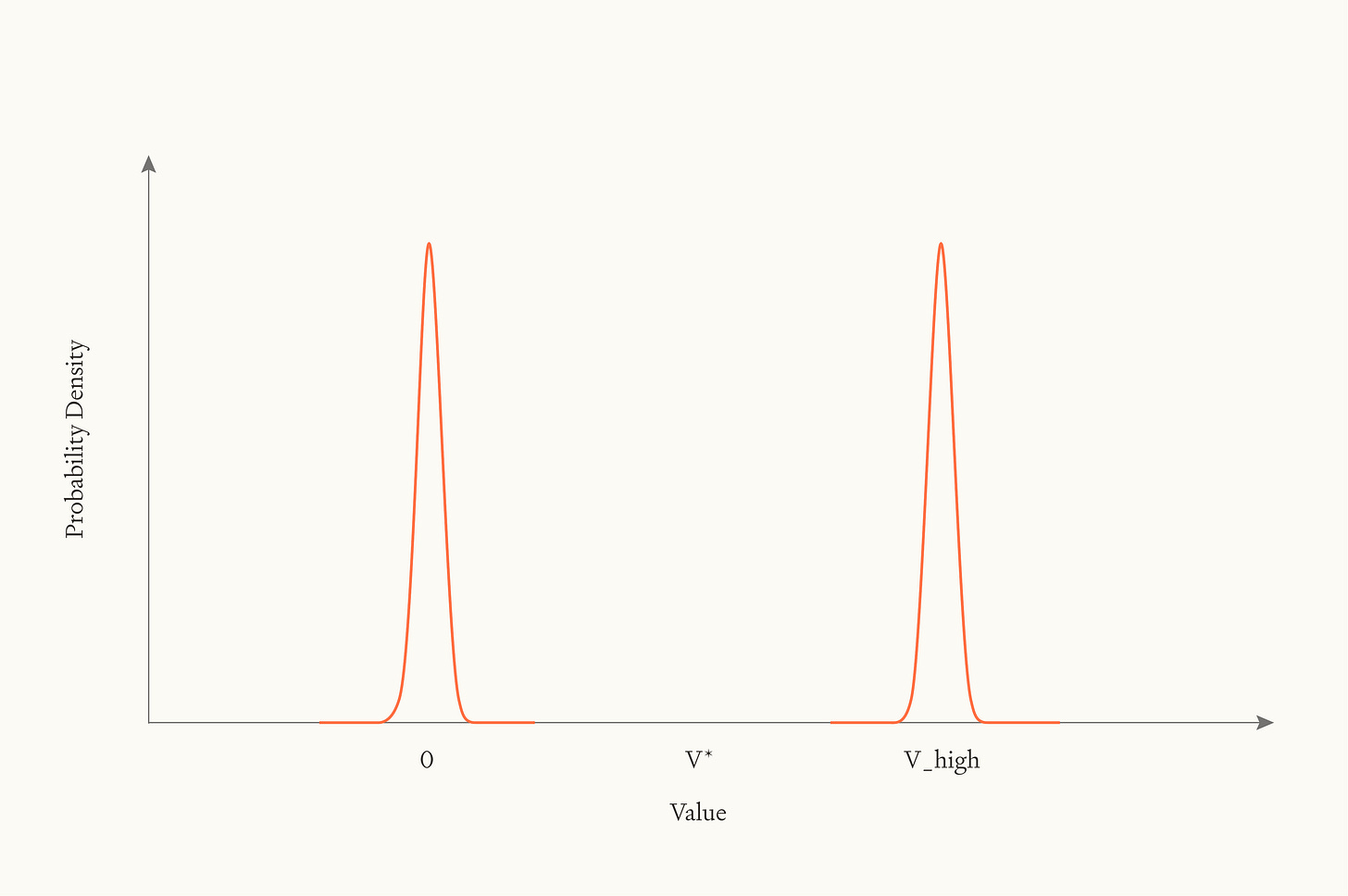

Why would Maxipok be true? We suggest it’s because of an implicit assumption, which we’ll call Dichotomy. Roughly, Dichotomy says that futures cluster sharply into two categories: very good ones (where humanity survives and flourishes) and very bad ones (existential catastrophes). If futures really are dichotomous in this way, then the only thing that matters is shifting probability mass from the bad cluster to the good cluster, and reducing existential risk would indeed be the overwhelming priority.

Here’s a more precise definition:

Dichotomy: The distribution of the difference that human-originating intelligent life makes to the value of the universe over possible futures is very strongly bimodal, no matter what actions we take.
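One way to see why Dichotomy would support Maxipok is a two-cluster approximation. Here $p$ is the probability of landing in the good cluster, and $V_{\text{good}}$ and $V_{\text{bad}}$ are the roughly fixed values of the two clusters; these symbols are our own, introduced for illustration:

```latex
\mathbb{E}[V] \approx p\,V_{\text{good}} + (1-p)\,V_{\text{bad}}
             = V_{\text{bad}} + p\,\bigl(V_{\text{good}} - V_{\text{bad}}\bigr),
\qquad
\Delta\mathbb{E}[V] \approx \Delta p\,\bigl(V_{\text{good}} - V_{\text{bad}}\bigr).
```

If no action can move $V_{\text{good}}$ or $V_{\text{bad}}$ much ("no matter what actions we take"), the only remaining lever is $p$, which is exactly Maxipok's recommendation.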

The case for Dichotomy

Maxipok, then, significantly hinges on Dichotomy. And it’s not a crazy view—we see three arguments in its favour.

The first argument appeals to a wide “basin of attraction” pointing towards near-best futures. Just as an object captured by a planet’s gravity inevitably gets pulled into orbit or crashes into the planet itself, perhaps any society that is competent or good enough to survive naturally gravitates toward the best possible arrangement.

But there are plausible futures where humanity survives, but doesn’t morally converge. Just imagine all power ends up consolidated in the hands of a single immortal dictator (say, an uploaded human or an AI). There’s no reason to think this dictator will inevitably discover and care about what’s morally valuable: they might learn what’s good and simply not care, or they might deliberately avoid exposing themselves to good moral arguments. As far as we can tell, nothing about being powerful and long-lived guarantees moral enlightenment or motivation. More generally, it seems implausible that evolutionary or other pressures would be so overwhelming as to make inevitable a single, supremely valuable destination.

The second argument for Dichotomy is that near-best futures might be easy to achieve. Perhaps value is bounded above, at a low bound, so that as long as future civilisation doesn’t soon go extinct, and doesn’t go in a direction that’s completely valueless, it will end up producing close to the best outcome possible.

But bounded value doesn’t clearly support Dichotomy. For one thing, there’s a nasty asymmetry: while goodness might be bounded above, badness almost certainly isn’t bounded below (intuitively, we can always add more awful things and make the world worse overall). That asymmetry wouldn’t support Dichotomy; it would support a distribution with a long negative tail, in which preventing extinction could generally make the future worse. That’s not the only issue with bounded views, but at any rate they’re unlikely to make Dichotomy true.

Here’s a third argument that doesn’t require convergence or bounded value. Perhaps the most efficient uses of resources produce vastly more value—orders of magnitude more—than almost anything else. Call the hypothetical best use “valorium” (whatever substrate most efficiently generates moral value per joule of energy). Then either future civilisation optimises for valorium or it doesn’t. If it does, the future is enormously good. If it doesn’t, the future has comparatively negligible value.

There’s some evidence for this heavy-tailed view of value. Philosopher Bertrand Russell wrote of love: “it brings ecstasy—ecstasy so great that I would often have sacrificed all the rest of life for a few hours of this joy.” One survey found that over 50% of people report their most intense experience being at least twice as intense as their second-most intense, with many reporting much higher ratios.

On the other hand, Weber-Fechner laws in psychophysics suggest that perceived intensity follows a logarithmic relationship to stimulus intensity, implying light rather than heavy tails.
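As a rough illustration of why logarithmic perception points toward light tails, here is a sketch of Fechner's law, $p = k \ln(S/S_0)$, where $p$ is perceived intensity, $S$ the stimulus, $S_0$ the detection threshold and $k$ a constant. The values of $k$ and $S_0$ below are arbitrary assumptions, and the function name is hypothetical.

```python
import math


def perceived_intensity(stimulus: float, threshold: float = 1.0, k: float = 1.0) -> float:
    """Fechner's law: perceived intensity grows with the log of stimulus/threshold."""
    if stimulus < threshold:
        return 0.0  # below the detection threshold nothing is perceived
    return k * math.log(stimulus / threshold)


# Each tenfold increase in stimulus adds the same fixed amount (k * ln 10) of perceived intensity.
for s in (10, 100, 1000, 10_000):
    print(f"stimulus {s:>6}: perceived {perceived_intensity(s):.2f}")

# A stimulus 1000x stronger than 10 is perceived as only 4x as intense.
ratio = perceived_intensity(10_000) / perceived_intensity(10)
print(f"perceived ratio for a 1000x stimulus ratio: {ratio:.1f}")
```

If experienced value tracked perceived intensity in this way, even enormous differences in the underlying stimulus would translate into modest differences in experience.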

But it’s hard to know how to extrapolate from human examples to the distribution of value on a cosmic scale. This “Extremity of the Best” hypothesis (the third argument above) isn’t obviously wrong, but we wouldn’t bet on it.

The case against Dichotomy

Even if one of these arguments had merit, two more general considerations count strongly against Dichotomy, and we end up rejecting it.

In the future, resources will likely be divided among different groups—different nations, ideologies, value systems, or even different star systems controlled by different factions. If space is defence-dominant (meaning a group holding a star system can defend it against aggressors, plausibly true given vast interstellar distances), then the initial allocation of cosmic resources could persist indefinitely.

This breaks Dichotomy even if the best uses of resources are extremely heavy-tailed. Some groups might optimise for those best uses, while others don’t. The overall value of the future scales continuously with what fraction of resources go to which groups, and there’s no reason to expect only extreme divisions. The same applies within groups. Individuals might divide their resource budgets between optimal and very suboptimal uses, but the division itself can vary widely.
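Here is a minimal sketch of this point under assumptions we introduce purely for illustration: the best use of resources is 1,000 times as valuable per unit as a typical use, and the fraction of resources held by groups pursuing the best use is equally likely to be anything from 0 to 1. Even with that extreme heavy tail, total value is linear in the fraction, so outcomes spread across the whole range instead of clustering at two poles. All names and numbers are hypothetical.

```python
import random

BEST_VALUE_PER_UNIT = 1000.0  # hypothetical: value of the best use of a unit of resources
TYPICAL_VALUE_PER_UNIT = 1.0  # hypothetical: value of a typical use


def future_value(fraction_to_best: float) -> float:
    """Total value when a fraction of resources goes to the best use and the rest to typical uses."""
    return fraction_to_best * BEST_VALUE_PER_UNIT + (1 - fraction_to_best) * TYPICAL_VALUE_PER_UNIT


random.seed(0)
# Assumption: the fraction controlled by optimising groups is uniform on [0, 1].
outcomes = [future_value(random.random()) for _ in range(100_000)]

max_value = future_value(1.0)
# Share of outcomes that are neither near-worthless (< 10% of max) nor near-best (> 90% of max).
middle = sum(0.1 * max_value <= v <= 0.9 * max_value for v in outcomes) / len(outcomes)
print(f"share of futures in the middle of the value range: {middle:.2f}")
```

The uniform assumption does real work here; the point is only that heavy-tailed value per unit does not, by itself, force the fraction to sit at 0 or 1.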

Perhaps the deepest problem for Dichotomy is that we should just be widely uncertain about all these theoretical questions. We don’t know whether societies converge, whether value is bounded, whether the best uses of resources are orders of magnitude better. And when you’re uncertain across many different probability distributions—some dichotomous, some not—the expected distribution is non-dichotomous. It’s like averaging a bimodal distribution with a normal distribution: you get something in between, with probability mass spread across the middle range.
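The averaging argument can be checked with a small sketch. Values are expressed as a fraction of the best achievable future; the two models, their parameters, and the 50/50 credence are all assumptions chosen for illustration.

```python
from statistics import NormalDist

# Hypothetical model A (dichotomous): futures cluster near 0 or near 1.
bimodal = [NormalDist(0.0, 0.05), NormalDist(1.0, 0.05)]
# Hypothetical model B (non-dichotomous): futures spread around the middle.
unimodal = NormalDist(0.5, 0.2)


def mass_between(lo: float, hi: float, dist: NormalDist) -> float:
    """Probability that a normal variable falls in [lo, hi]."""
    return dist.cdf(hi) - dist.cdf(lo)


def bimodal_mass(lo: float, hi: float) -> float:
    """Equal-weight mixture of the two clusters."""
    return sum(mass_between(lo, hi, d) for d in bimodal) / len(bimodal)


credence_in_dichotomy = 0.5  # assumption: 50/50 uncertainty between the two models

p_middle_a = bimodal_mass(0.2, 0.8)
p_middle_b = mass_between(0.2, 0.8, unimodal)
p_middle_overall = credence_in_dichotomy * p_middle_a + (1 - credence_in_dichotomy) * p_middle_b

print(f"P(middle) under dichotomous model:     {p_middle_a:.4f}")
print(f"P(middle) under non-dichotomous model: {p_middle_b:.4f}")
print(f"P(middle) after averaging over models: {p_middle_overall:.4f}")
```

Any non-trivial credence in a non-dichotomous model leaves substantial probability in the middle range; the specific numbers depend entirely on the assumed distributions.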

Non-existential interventions can persist

There’s one more objection to consider. Maybe we’re wrong about Dichotomy, but Maxipok is still right for a different reason: only existential catastrophes have lasting effects on the long-term future. Everything else—even seemingly momentous events like world wars, cultural revolutions, or global dictatorships—will eventually wash out in the fullness of time. Call this persistence skepticism.

We think you should reject persistence skepticism. It seems reasonably likely, at least as likely as extinction, that the coming century will see either lock-in (events where certain values, institutions, or power distributions become effectively permanent) or other forms of persistent path-dependence.

Two mechanisms make this plausible:

AGI-enforced institutions. Once we develop artificial general intelligence, it becomes possible to create and enforce perpetually binding constitutions, laws, or governance structures. Unlike humans, digital agents can make exact copies of themselves, store backups across multiple locations, and reload original versions to verify goal stability. A superintelligent system tasked with enforcing some constitution could maintain that system across astronomical timescales. And once such a system is established, changing it might be impossible—the enforcer itself might prevent modifications.

Space settlement and defence dominance. Once space settlement becomes feasible (perhaps soon after AGI), cosmic resources will likely be divided among different groups. Moreover, star systems may be defence-dominant. If so, the initial allocation of star systems could persist indefinitely, changing hands only through trade, with each group’s values locked into its own territory.

Crucially, even though AGI and space settlement provide the ultimate lock-in mechanisms, earlier moments could significantly affect how those lock-ins go. A dictator securing power for just 10 years might use that time to develop means to retain power for 20 more years, then reach AGI within that window—thereby indefinitely entrenching what would otherwise have been temporary dominance.

This suggests quite a few early choices could have persisting impact:

The specific values programmed into early transformative AI systems

The design of the first global governing institutions

Early legal and moral frameworks established for digital beings

The principles governing allocation of extraterrestrial resources

Whether powerful countries remain democratic during the intelligence explosion

Actions in these areas might sometimes prevent existential catastrophes, but they often improve the future in non-drastic ways, contrary to Maxipok.

So what?

We think it’s a reasonably big deal for longtermists if Maxipok is false.

The update is something like this: rather than focusing solely on existential catastrophe, longtermists should tackle the broader category of grand challenges: events whose surrounding decisions could alter the expected value of Earth-originating life by at least one part in a thousand (0.1%).
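A minimal sketch of the threshold test follows. It assumes that "alter the expected value by one part in a thousand" means a relative change of at least 0.1% against the expected value of the baseline option; that is our reading, since the text does not specify the baseline. The function names and example values are hypothetical.

```python
GRAND_CHALLENGE_THRESHOLD = 0.001  # one part in a thousand


def relative_change(ev_baseline: float, ev_alternative: float) -> float:
    """Fractional change in expected value from choosing the alternative over the baseline."""
    return abs(ev_alternative - ev_baseline) / abs(ev_baseline)


def is_grand_challenge(ev_baseline: float, ev_alternative: float,
                       threshold: float = GRAND_CHALLENGE_THRESHOLD) -> bool:
    """True if the decision shifts expected value by at least `threshold` of the baseline."""
    return relative_change(ev_baseline, ev_alternative) >= threshold


# World-government example, with a surviving status-quo future normalised to 1 (our assumption):
# status quo = 0.99 * 1.0, world government = 1.0 * 0.95.
print(is_grand_challenge(0.99, 0.95))    # True: about a 4% change
print(is_grand_challenge(0.99, 0.9895))  # False: about a 0.05% change
```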

Concretely, new priorities could include:

Deliberately postponing or accelerating irreversible decisions to change how those decisions get made. This could mean pushing for international agreements on AI development timelines, implementing reauthorisation clauses for major treaties, or preventing premature allocation of space resources.

Ensuring that when lock-ins occur, the outcomes are as beneficial as possible. This might involve advocating for distributed power in AI governance (rather than single-country or single-company control), encouraging widespread moral reflection before lock-ins occur, or working to ensure that humble, morally motivated actors design locked-in institutions rather than ideologues or potential dictators.

Influencing which values and which groups control resources when critical decisions get made. This could mean promoting better moral worldviews, working on the treatment and rights of digital beings before those rights get locked in, or affecting the balance of power between democratic and authoritarian systems.

Building better mechanisms for global coordination, moral deliberation, and governance handoffs to transformative AI systems.

None of these interventions is primarily about reducing the probability of existential catastrophe. But if Maxipok is false, they could be as important as traditional existential risk reduction interventions, like preventing all-out AI takeover or extinction-level biological catastrophes, or even more important.

None of this makes existential risk reduction less valuable in absolute terms. We’re making the case for going beyond a singular focus on existential risk, to improving the future conditional on existential survival, too. The stakes remain astronomical.


The main problem with Maxipok is that it assumes we know how to reduce existential risk. This is clearly false: consider, for example, two competing approaches to reducing AI risk:

1) Try to stop or slow AI development.

2) Press on with AI development in the hope that we solve alignment before the bad guys create a badly aligned AI.

If we don't know which of these to pursue, most other discussion is rendered meaningless.

Suppose we focus on dealing with climate change. Maybe the optimal way to do that is to press on with AI development. Who knows?