Preparing for the Intelligence Explosion

Imagine all the scientific, intellectual and technological developments that you would expect to see by the year 2125, if technological progress continued over the next century at roughly the same rate that it did over the last century. And then imagine all of those developments occurring over the course of just ten years.

This is in fact what we should expect to see. And this acceleration may well begin before the decade is out.

A century in a decade

In a new paper, we analyse the impact of AI on the rate of technological development, arguing that a century of technological progress in a decade looks likely even under conservative assumptions.

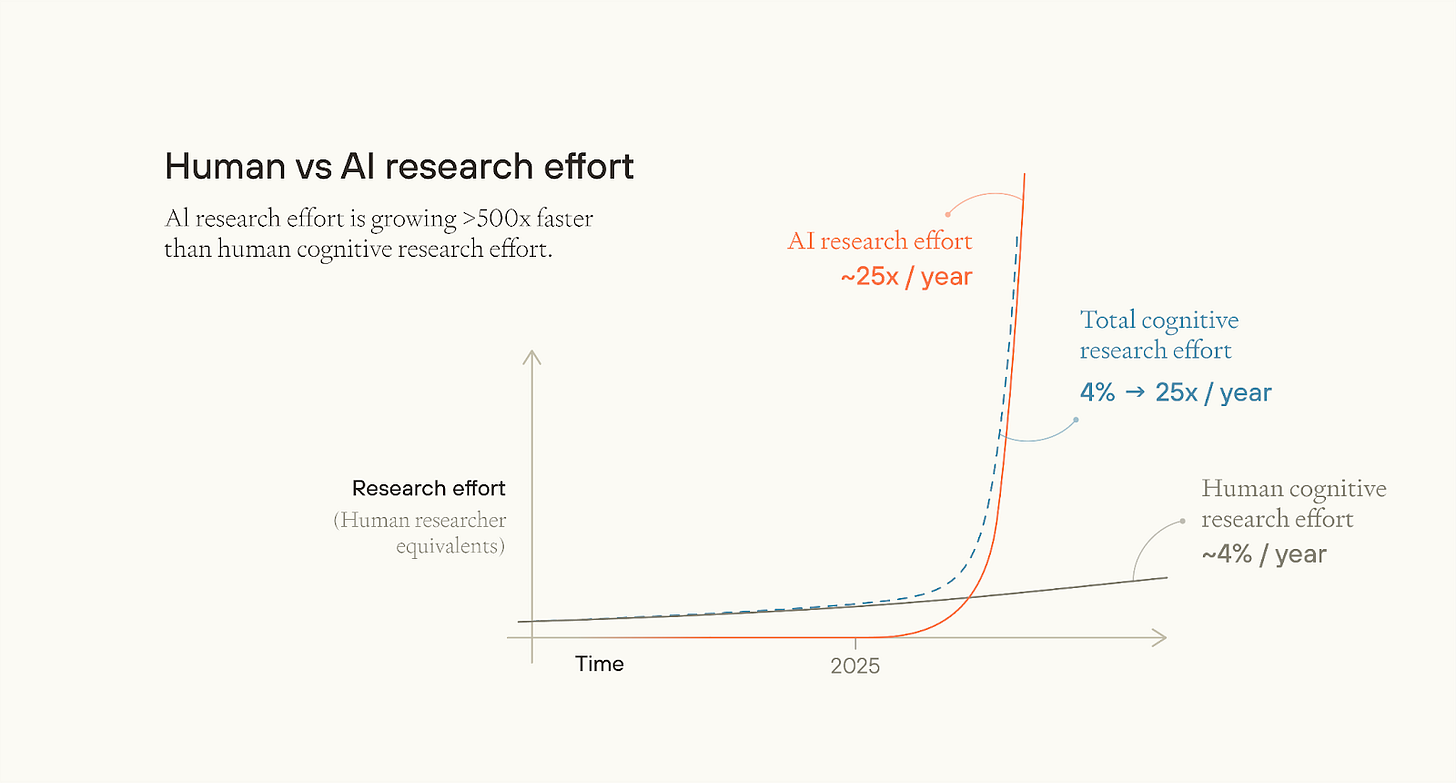

The cognitive labour from AI models is increasing more than twentyfold every year. Even if improvements slow to half their rate, AI systems could still overtake all human researchers in their contribution to technological progress, and then grow by a further millionfold, measured in human-researcher equivalents, within a decade.
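The compounding behind this claim can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes that "half their rate" means halving the exponential growth rate (so the annual multiple becomes the square root of twenty), and the function names are hypothetical rather than taken from the paper.

```python
import math


def slowed_annual_factor(current_factor: float, pace: float) -> float:
    """Annual growth multiple if the exponential growth rate is scaled by `pace`.

    Assumption: "slowing to half the rate" halves the growth rate in log
    space, i.e. pace=0.5 turns a 20x annual multiple into sqrt(20)x.
    """
    return current_factor ** pace


def cumulative_growth(annual_factor: float, years: int) -> float:
    """Total growth multiple after compounding `annual_factor` for `years`."""
    return annual_factor ** years


current = 20.0                                # "twentyfold every year" (from the text)
slowed = slowed_annual_factor(current, 0.5)   # roughly 4.47x per year
decade = cumulative_growth(slowed, 10)        # equals 20**5 under this assumption

print(f"slowed annual factor: {slowed:.2f}x")
print(f"growth over 10 years: {decade:,.0f}x")
```

Under this reading, ten years of growth at the slower pace gives about 3.2 million-fold, which clears the millionfold mark. If "half their rate" is instead read as halving the annual multiple to tenfold, the decade total is ten billion-fold, so the claim holds under either interpretation.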

For business to go on as usual, current trends in pre-training efficiency, post-training enhancements, scaling training runs and inference compute must effectively grind to a halt.

Once AI begins outshining human researchers, we could get many new technologies in rapid succession: new miracle drugs and new bioweapons; automated companies and automated militaries; superhuman prediction and superhuman persuasion.

The intelligence explosion could yield, in the words of Anthropic CEO Dario Amodei, “a country of geniuses in a data center”, driving a century's worth of technological progress in less than a decade. This accelerated decade would result in many new opportunities and challenges.

Not just alignment

Sometimes, those who expect superintelligent AI believe that outcomes are effectively all-or-nothing and depend on one challenge: AI alignment. Either we fail to align AI, in which case humanity is permanently disempowered; or we succeed at aligning AI, and then we use it to solve all of our other problems.

Our paper argues against this “all-or-nothing” view. Instead, we argue that AGI preparedness should cover a far wider range of opportunities and challenges, including:

The risk of human takeover, by whoever controls superintelligence;

Novel risks from destructive technologies, such as bioweapons, drone swarms, nanotechnology, and technologies we haven’t yet concretely anticipated;

The establishment of norms, laws, and institutions around critical ethical issues like the rights of digital beings and the allocation of newly-valuable offworld resources;

The challenge of harnessing AI’s ability to improve society’s collective epistemology, rather than distort it;

The opportunity to harness the potentially radical abundance that AI could bring, distributing it equitably, and enabling treaties that make everyone far better off than they are today.

Grand challenges such as these may meaningfully determine how well the future goes. They are forks in the road of human progress. In many cases, we shouldn’t expect them to go well by default — not unless we prepare in advance.

Because of the accelerated pace of change, the world will need to handle these challenges fast, on a timescale far shorter than what human institutions are designed for. By default, we simply won’t have time for extended deliberation and slow trial and error.

Aligned superintelligence could solve some of these challenges for us. But not all of them. Some challenges will come before aligned superintelligence does, and some solutions, like agreements to share power post-AGI, or improving currently slow-moving institutions, are only feasible if we work on them before the intelligence explosion occurs.

Preparing for the Intelligence Explosion

So we should start preparing for these challenges now. We can design better norms and policies for digital beings and space governance; build up foresight capacity; improve the responsiveness and technical literacy of government institutions; and more. The broader sweep of grand challenges looks alarmingly neglected.

Many people are admirably focused on preparing for a single challenge, like misaligned AI takeover, AI misinformation, or accelerating the economic benefits of AI. But focusing on one challenge is not the same as ignoring all others: if you are a single-issue voter on AI, you are probably making a mistake. We should take seriously all the challenges the intelligence explosion will bring, be open to new and overlooked challenges, and appreciate that all these challenges could interact in action-relevant and often confusing ways.

So the intelligence explosion demands not just preparation, but humility: a clear-eyed understanding of both the magnitude of what's coming and the limits of our ability to predict it. Careful preparation now could mean the difference between an intelligence explosion that empowers humanity and one that overwhelms it. The future is approaching faster than we expect, and our window for thoughtful preparation may be brief.