How Quick and Big Would a Software Intelligence Explosion Be?

AI systems may soon fully automate AI R&D. Daniel Eth and I have argued that this could precipitate a software intelligence explosion – a period of rapid AI progress due to AI improving AI algorithms and data.

But we never addressed a crucial question: how big would a software intelligence explosion be?

This new paper fills that gap.

Overall, we think that the software intelligence explosion will probably (~60%) compress >3 years of AI progress into <1 year, but is somewhat unlikely (~20%) to compress >10 years into <1 year. That’s >3 years of total AI progress at recent rates (from both compute and software), achieved solely through software improvements. If compute is still increasing during this time, as seems likely, that will drive additional progress.

The existing discussion on the “intelligence explosion” has generally split into those who are highly sceptical of intelligence explosion dynamics and those who anticipate extremely rapid and sustained capabilities increases. Our analysis suggests an intermediate view: the software intelligence explosion will be a significant additional acceleration at just the time when AI systems are surpassing top humans in broad areas of science and engineering.

Like all analyses of this topic, this paper is necessarily speculative. We draw on evidence where we can, but the results are significantly influenced by guesswork and subjective judgement.

Summary

How the model works

We use the term ASARA to refer to AI that can fully automate AI research (ASARA = “AI Systems for AI R&D Automation”). For concreteness, we define ASARA as AI that can replace every human researcher at an AI company with 30 equally capable AI systems each thinking 30X human speed.

We simulate AI progress after the deployment of ASARA.

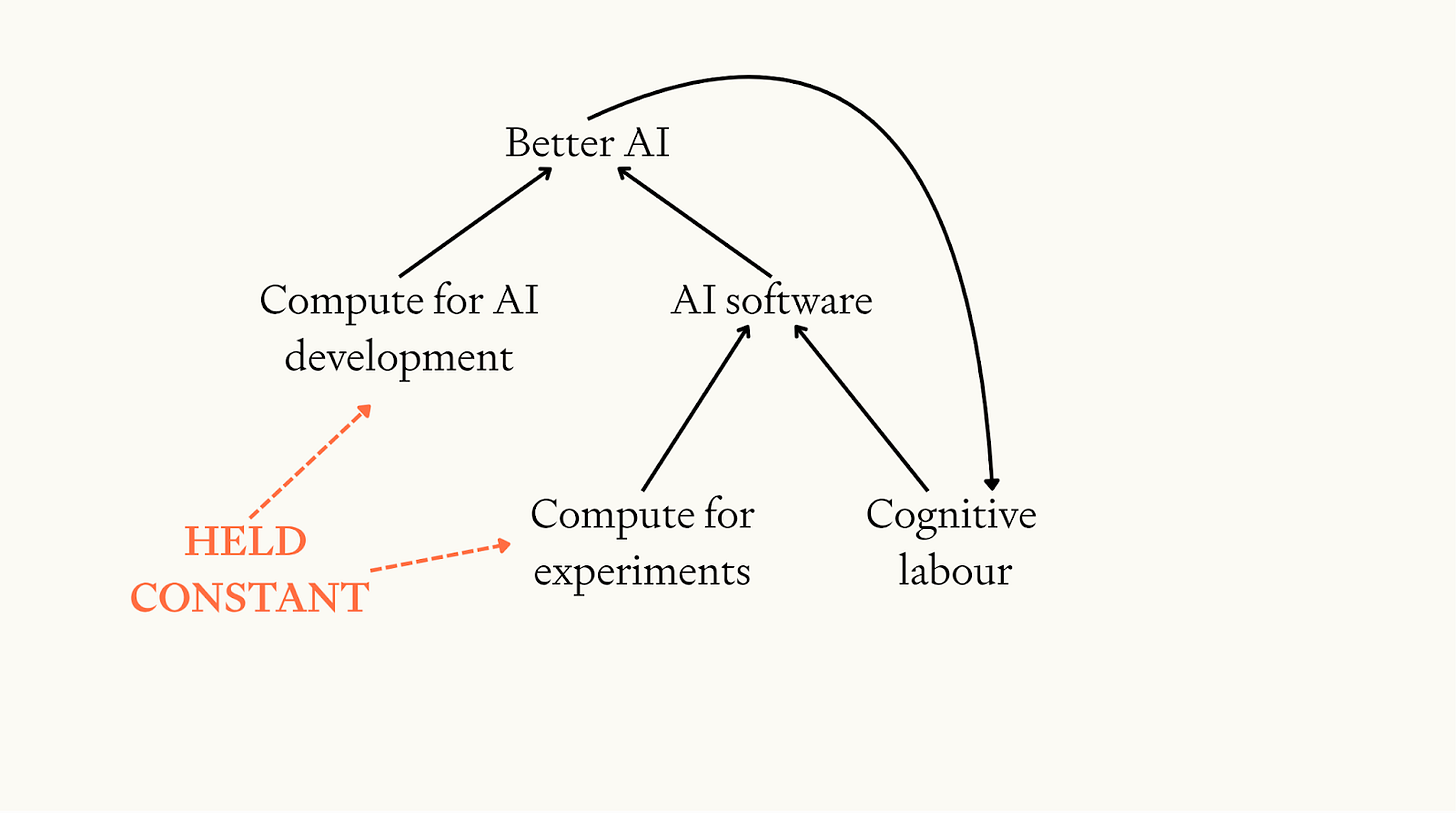

We assume that half of recent AI progress comes from using more compute in AI development and the other half comes from improved software. (“Software” here refers to AI algorithms, data, fine-tuning, scaffolding, inference-time techniques like o1 — all the sources of AI progress other than additional compute.) We assume compute is constant and only simulate software progress.

We assume that software progress is driven by two inputs: 1) cognitive labour for designing better AI algorithms, and 2) compute for experiments to test new algorithms. Compute for experiments is assumed to be constant. Cognitive labour is proportional to the level of software, reflecting the fact that AI has automated AI research.

So the feedback loop we simulate is: better AI → more cognitive labour for AI research → more AI software progress → better AI →…

The model has three key parameters that drive the results:

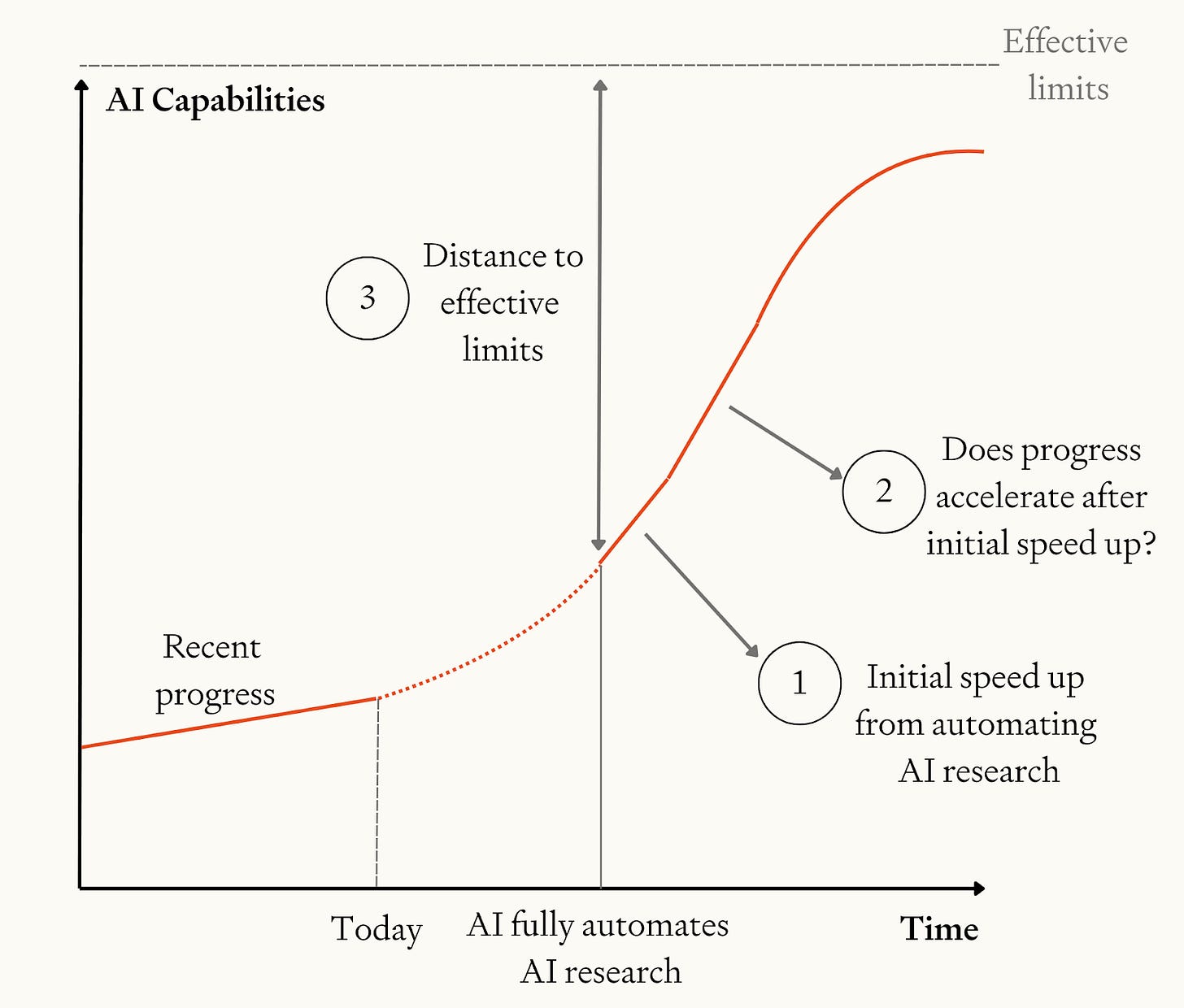

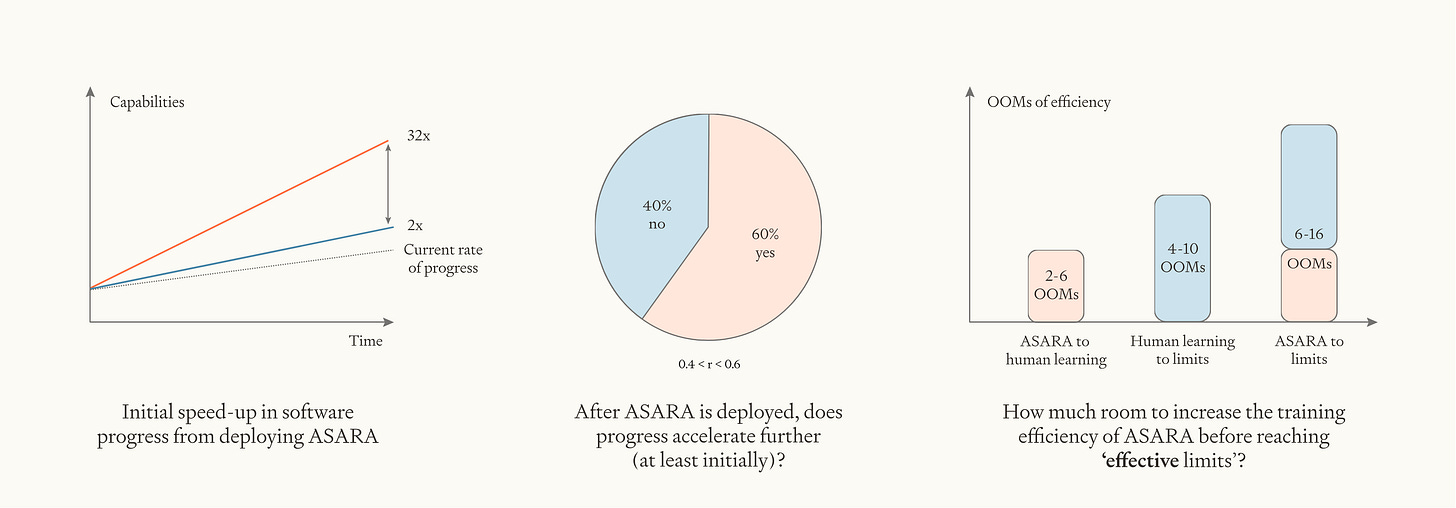

Initial speed-up. When ASARA is initially deployed, how much faster is software progress compared to the recent pace of software progress?

Returns to software R&D. After the initial speed-up from ASARA, does the pace of progress accelerate or decelerate as AI progress feeds back on itself?

This is given by the parameter r. Progress accelerates if and only if r > 1.

r depends on 1) the extent to which software improvements get harder to find as the low-hanging fruit is plucked, and 2) the strength of the “stepping on toes” effect, whereby there are diminishing returns to more researchers working in parallel.

Distance to “effective limits” on software. How much can software improve after ASARA before we reach fundamental limits to the compute efficiency of AI software?

The model assumes that, as software approaches effective limits, the returns to software R&D become less favourable and so AI progress decelerates.
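To make the interaction between the three parameters concrete, here is a minimal toy sketch of the feedback loop. It is not the paper's actual model. It assumes a simple rule under which each software doubling takes 2^(1/r - 1) times as long as the previous one (so progress accelerates exactly when r > 1), and it assumes r falls linearly to zero as software approaches its effective limit. The function names, the linear decline, and the pre-ASARA pace of about 0.5 OOMs of software progress per year (half of roughly 1 OOM per year of overall progress) are illustrative assumptions.

```python
import math

# Assumptions for this sketch (not stated in this form in the text):
# overall progress is ~1 OOM of effective compute per year, half of it
# from software, so software improves ~0.5 OOM per year before ASARA.
OVERALL_OOMS_PER_YEAR = 1.0
SOFTWARE_OOMS_PER_YEAR = 0.5
BASELINE_DOUBLING_TIME = math.log10(2) / SOFTWARE_OOMS_PER_YEAR  # ~0.6 years


def doublings_in_period(initial_speedup, r0, max_doublings,
                        baseline_doubling_time=BASELINE_DOUBLING_TIME,
                        years=1.0):
    """Count software doublings completed within `years` of ASARA deployment.

    Toy dynamics: each doubling takes 2**(1/r - 1) times as long as the
    previous one, and r falls linearly from r0 to 0 as the number of
    completed doublings approaches max_doublings (the effective limit).
    """
    doubling_time = baseline_doubling_time / initial_speedup
    elapsed, done = 0.0, 0
    while done < max_doublings and elapsed + doubling_time <= years:
        elapsed += doubling_time
        done += 1
        r = r0 * (1 - done / max_doublings)
        if r <= 0:
            break  # effective limit reached
        # Exponent is capped only to avoid float overflow as r approaches 0.
        doubling_time *= 2 ** min(1 / r - 1, 60.0)
    return done


def years_of_overall_progress(doublings):
    """Convert software doublings into years of progress at the recent overall pace."""
    return doublings * math.log10(2) / OVERALL_OOMS_PER_YEAR
```

For instance, `years_of_overall_progress(doublings_in_period(initial_speedup=5, r0=1.2, max_doublings=40))` gives the years of overall progress compressed into one year under those purely illustrative inputs. Raising `r0` or `max_doublings` lets the acceleration run for longer before the limit bites.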

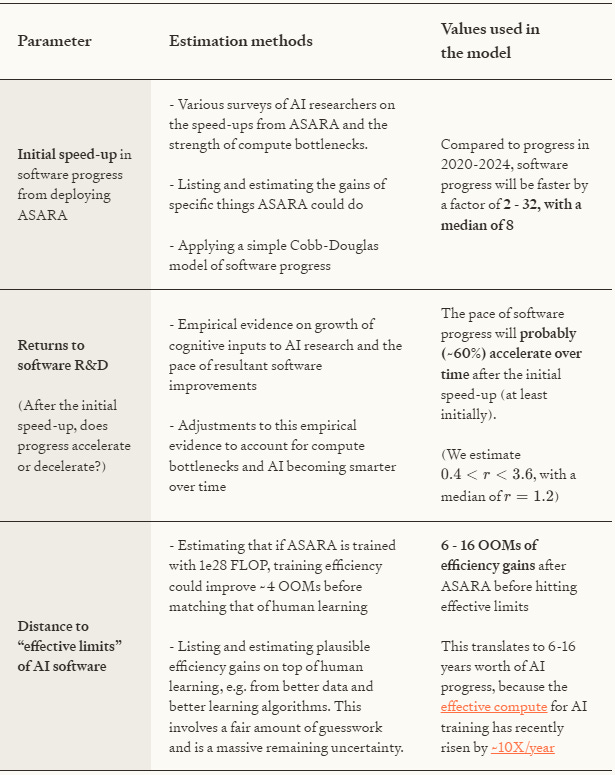

The following table summarises our estimates of the three key parameters:

We put log-uniform probability distributions over the model parameters and run a Monte Carlo simulation.
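As a rough illustration of what this involves, the sketch below draws each parameter log-uniformly between a lower and an upper bound and reports the fraction of draws in which a model's output exceeds a threshold. The helper names, the `model` callable, and any ranges passed in are hypothetical placeholders. They are not the paper's code or its parameter estimates.

```python
import math
import random


def log_uniform(rng, low, high):
    """Draw a value whose logarithm is uniform between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def monte_carlo_fraction(model, ranges, threshold, n_samples=10_000, seed=0):
    """Estimate P(model(**params) > threshold) under log-uniform parameters.

    `ranges` maps each parameter name to a (low, high) pair; `model` is any
    function that accepts those names as keyword arguments.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    hits = 0
    for _ in range(n_samples):
        params = {name: log_uniform(rng, low, high)
                  for name, (low, high) in ranges.items()}
        if model(**params) > threshold:
            hits += 1
    return hits / n_samples
```

A log-uniform distribution spreads probability evenly across orders of magnitude, so a parameter ranging from 1 to 100 is as likely to fall between 1 and 10 as between 10 and 100. That suits parameters whose uncertainty spans multiples rather than fixed increments.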

You can enter your own inputs to the model on this website.

Results

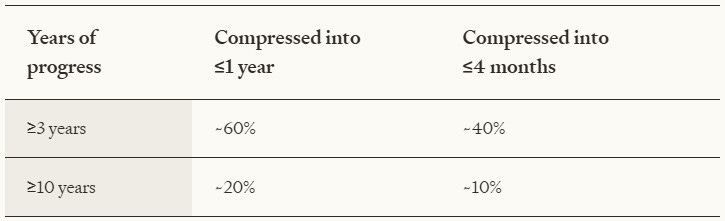

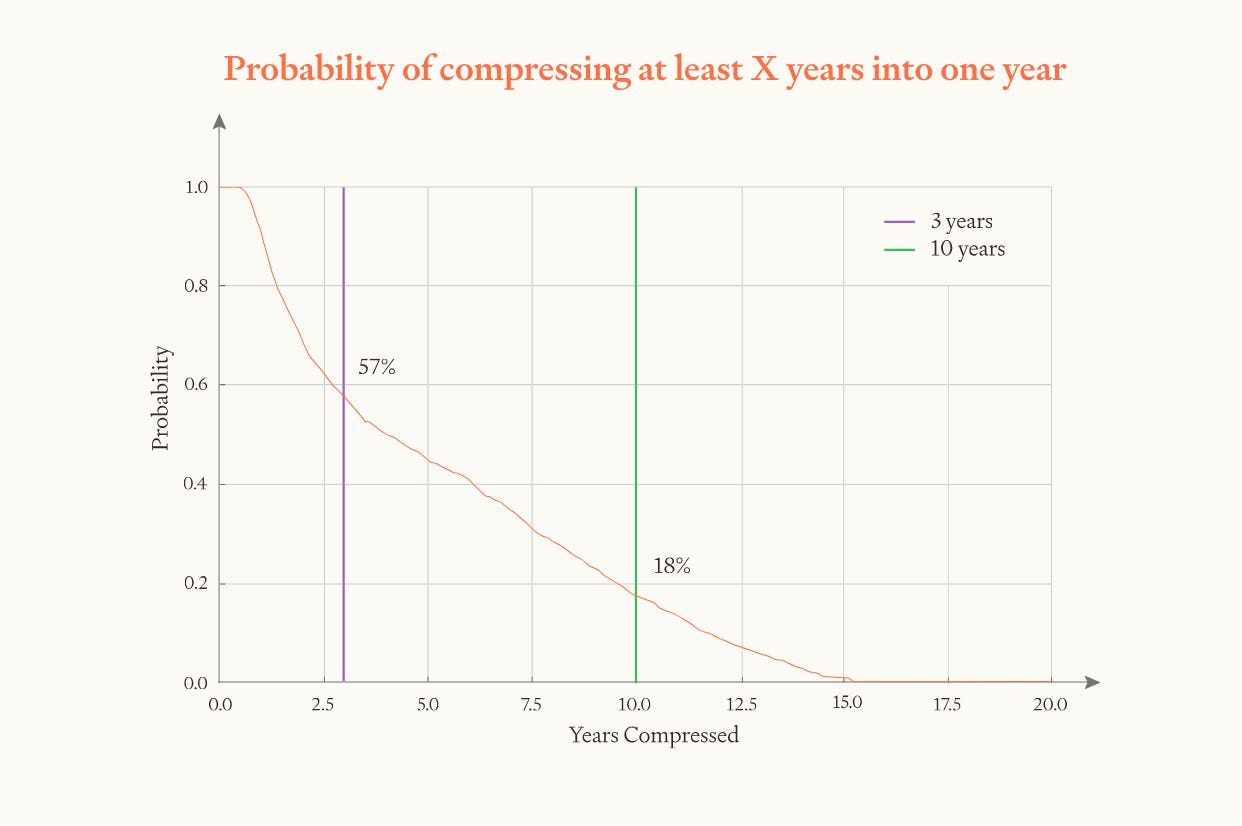

Here are the model’s bottom lines (to 1 sig fig):

Remember, the simulations conservatively assume that compute is held constant. They compare the pace of AI software progress after ASARA to the recent pace of overall AI progress, so “3 years of progress in 1 year” means “6 years of software progress in 1 year”.

While the exact numbers here are obviously not to be trusted, we find the following high-level takeaway meaningful: averaged over one year, AI progress could easily be >3X faster, could potentially be >10X faster, but won’t be 30X faster absent a major paradigm shift. In particular:

Initial speed-ups of >3X are likely, and the returns to software R&D are likely high enough to prevent progress slowing back down before there is 3 years of progress.

If effective limits are >10 OOMs away and returns to software R&D remain favourable until we are close to those limits, progress can accelerate for long enough to get ten years of progress in a year. We think it’s plausible but unlikely that both these conditions hold.

To get 30 years of progress in one year, either you need extremely large efficiency gains on top of ASARA (30 OOMs!) or a major paradigm shift that enables massive progress without significant increases in effective compute (which seems more likely).

We also consider two model variants, and find that this high-level takeaway holds in both:

Retraining new models from scratch. In this variant, some fraction of software progress is “spent” making training runs faster as AI progress accelerates.

Gradual boost. In this variant, we simulate a gradual increase in AI capabilities from today’s AI to ASARA, with software progress accelerating along the way.

Discussion

If this analysis is right in broad strokes, how dramatic would the software intelligence explosion be?

There are two reference points we can take.

One reference point is historical AI progress. It took three years to go from GPT-2 to ChatGPT (i.e. GPT-3.5); it took another three years to go from GPT-3.5 to o3. That’s a lot of progress to see in one year just from software. We’ll be starting from systems that match top human experts in all parts of AI R&D, so we will end up with AI that is significantly superhuman in many broad domains.

Another reference point is effective compute. The amount of effective compute used for AI development has increased at roughly 10X/year. So, three years of progress would be a 1000X increase in effective compute; six years would be a million-fold increase. Ryan Greenblatt estimates that a million-fold increase might correspond to having 1000X more copies that think 4X faster and are significantly more capable. In that case, the software intelligence explosion could take us from 30,000 top-expert-level AIs each thinking 30X human speed to 30 million superintelligent AI researchers each thinking 120X human speed, with the capability gap between each superintelligent AI researcher and the top human expert about 3X as big as the gap between the top expert and a median expert.[1][2]
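The arithmetic behind this reference point can be laid out step by step. The sketch below uses only the figures quoted in the text and its notes (10X/year growth, 1000X more copies, 4X faster thinking, 8 OOMs of rank improvement, and a median expert ranked 1000th). The variable names are illustrative.

```python
# Figures taken from the text above.
GROWTH_PER_YEAR = 10                     # effective compute grows ~10X per year
START_COPIES, START_SPEED = 30_000, 30   # starting point in the example


def effective_compute_multiplier(years):
    """Effective compute gain from `years` of progress at the recent pace."""
    return GROWTH_PER_YEAR ** years


# Greenblatt's estimate as quoted: a million-fold increase might mean
# 1000X more copies, each thinking 4X faster.
end_copies = START_COPIES * 1_000
end_speed = START_SPEED * 4

# Capability gap, measured in "median expert to top expert" steps.
# If the median expert is the 1000th best, one such step is 3 OOMs of rank.
RANK_OOMS_GAINED = 8
OOMS_PER_STEP = 3
capability_steps = RANK_OOMS_GAINED / OOMS_PER_STEP

print(effective_compute_multiplier(3), effective_compute_multiplier(6))
# prints: 1000 1000000
print(end_copies, end_speed, round(capability_steps, 1))
# prints: 30000000 120 2.7
```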

Limitations

Our model is extremely basic and has many limitations, including:

Assuming AI progress follows smooth trends. We don’t model the possibility that superhuman AI unlocks qualitatively new forms of progress that amount to a radical paradigm shift; nor the possibility that the current paradigm stops yielding further progress shortly after ASARA. So we’ll underestimate the size of the tails in both directions.

No gears-level analysis. We don’t model how AIs will improve software in a gears-level way at all (e.g. via generating synthetic data vs by designing new algorithms). So the model doesn’t give us insight into these dynamics. Instead, we extrapolate high-level trends about how much research effort is needed to double the efficiency of AI algorithms. And we don’t model specific capabilities, just the amount of “effective training compute”.

“Garbage in, garbage out”. We’ve done our best to estimate the model parameters, but there are massive uncertainties in all of them. This flows right through to the results.

This uncertainty is especially large for the “distance to effective limits” parameter.

You can choose your own inputs to the model here!

No compute growth. The simulation assumes that compute doesn’t grow at all after ASARA is deployed, which is obviously a conservative assumption.

Overall, we think of this model as a back-of-the-envelope calculation. It’s our best guess, and we think there are some meaningful takeaways, but we don’t put much faith in the specific numbers.

Structure of the paper

The full paper lays out our analysis in more detail. We proceed as follows:

We explain how this paper relates to previous work on the intelligence explosion.

We clarify the scenario being considered.

We outline the model dynamics in detail.

We evaluate which parameter values to use – this is the longest section.

We summarise the parameter values chosen.

We describe the model predictions.

We discuss limitations.

[1] Greenblatt guesses that the improvement in capability from 6 OOMs of effective compute would be the same as 8 OOMs of rank improvement within a profession. We’ll take the relevant profession to be technical employees of frontier AI companies and assume the median such expert is the 1000th best worldwide, so the step from a median expert to a top expert is 3 OOMs of rank. So 8 OOMs of improvement would be 8/3 = 2.7 steps the same size as from a median expert to a top expert.

[2] Minor caveat: The starting point in this example (“30,000 top-expert-level AIs thinking 30x human speed”) corresponds to a slightly higher capability level than our definition of ASARA. We define ASARA as AI that can replace every employee with 30 copies running at 30x speed; if there are 1000 employees, this yields 30,000 AIs thinking at 30x human speed, whose average capability matches the average capability of the human researchers. So ASARA is mean-expert-level rather than top-expert-level.